AI is helping internet bot herders with greater scale, lower costs, and more sophisticated evasion techniques.

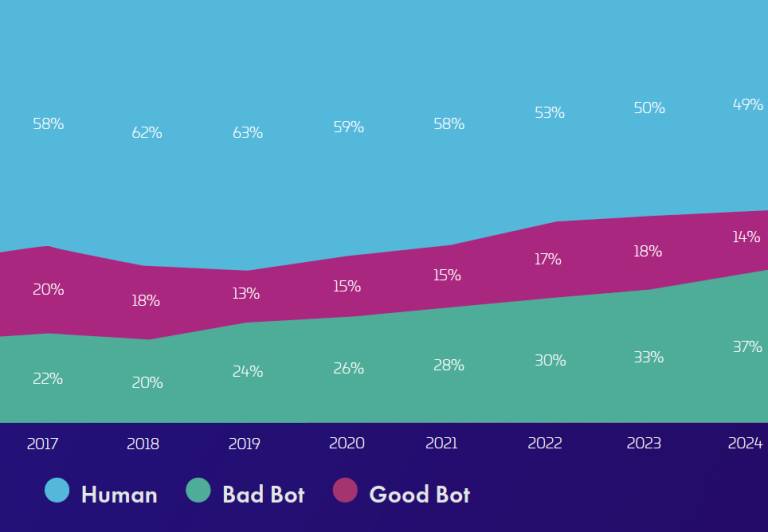

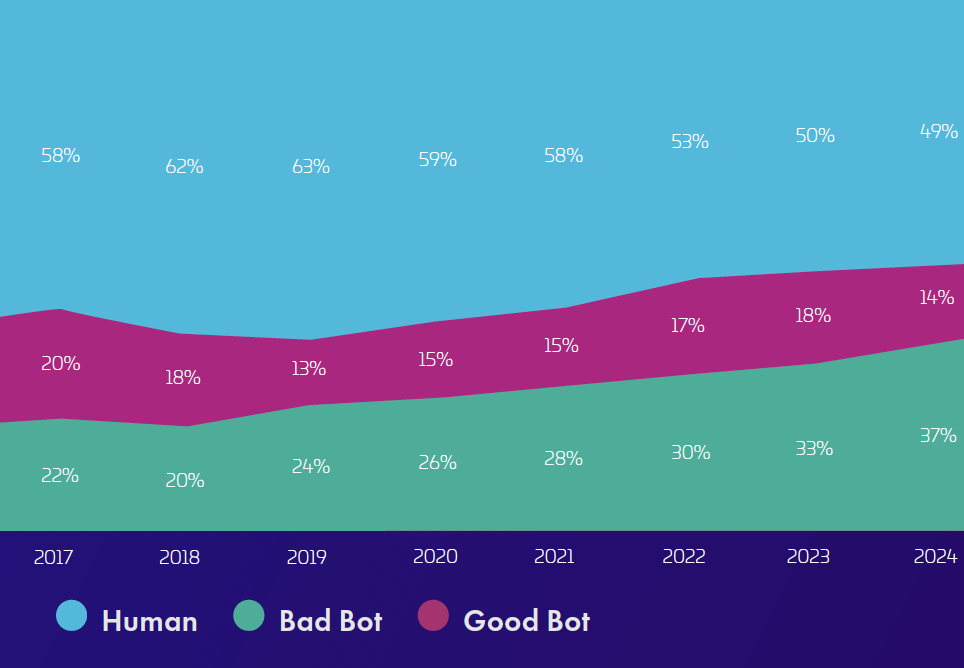

Bots on the internet now surpass human activity: 51% of all internet traffic is automated (bot) traffic. Malicious bots (bad bots) account for 37% of all traffic, while good bots account for only 14%. Much of the current expansion is fueled by criminal use of AI, and that use is likely to increase.
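The report's percentages are shares of all internet traffic, so the bad-bot and good-bot figures together make up the 51% bot total. A quick Python check, using only the figures quoted above, shows what fraction of bot traffic is malicious:

```python
# Shares of all internet traffic quoted from Imperva's report (percent).
BAD_BOT_SHARE = 37
GOOD_BOT_SHARE = 14

# Bad and good bots together make up all automated traffic.
bot_share = BAD_BOT_SHARE + GOOD_BOT_SHARE
human_share = 100 - bot_share

# Fraction of bot traffic (not of all traffic) that is malicious.
bad_fraction_of_bots = BAD_BOT_SHARE / bot_share

print(f"bot traffic: {bot_share}%, human traffic: {human_share}%")
print(f"malicious share of bot traffic: {bad_fraction_of_bots:.1%}")
```

In other words, about 72.5% of bot traffic, nearly three quarters, is malicious.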

Within bad bot traffic, there has been noticeable growth in simple but high-volume attacks. This again reflects the influence of AI, which allows less sophisticated actors to generate new bots and use AI to launch them. It follows the common trajectory of criminal AI use: simple applications at first, while actors learn how to use their new capability, followed by more sophisticated use as their AI skills mature. It also points to the likely future of the bot threat: advanced bots produced and delivered at the speed of simple bots. The bad bot threat will likely increase.

Imperva uses a basic classification of bots into ‘simple’ and ‘advanced’. Simple bots are easy to detect, and their source IP addresses are easy to discover. “They always look the same and are relatively easy to defend against,” says Tim Chang, global VP and GM of application security at Thales (which acquired Imperva in 2023). “But the advanced bots are more sophisticated and constantly change – the polymorphic bots.” These are harder to detect, and they cause more damage.

“Right now, it’s the simple attacks that have shown the most growth,” explains Chang. “As AI continues to develop and attackers get more comfortable with its use, so the evasion techniques will get more efficient, and the attacks themselves will become more sophisticated.”

Imperva’s annual report on bad bots takes a deep dive into the current bot scene. Two trends emerge: a surge in API bot attacks (44% of all advanced bots target APIs) and a rise in account takeover (ATO) attacks (up 40% over the previous year).

The four most common API bot attacks were data scraping (31%), payment fraud (26%), account takeover (12%), and scalping (11%). The common factor across all of them is the exploitation of API vulnerabilities, ranging from misconfigurations and insufficient rate limiting to weak authentication protocols.
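Insufficient rate limiting, one of the weaknesses named above, is the easiest to illustrate. A token bucket is a standard way to cap how fast a single client can call an API: each client's bucket holds up to `capacity` tokens, refills at `refill_per_sec`, and a request is refused when no token is left. The Python sketch below is a generic illustration, not Imperva's implementation; the class name and parameters are hypothetical, and it keeps state in memory on a single server.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Hypothetical per-client token-bucket rate limiter (illustrative sketch)."""

    def __init__(self, capacity: float, refill_per_sec: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity              # largest burst a client may send
        self.refill_per_sec = refill_per_sec  # sustained requests per second
        self.clock = clock                    # injectable so tests can fake time
        # client_id -> (tokens remaining, time of last update)
        self.buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client_id: str) -> bool:
        """Spend one token for this client if available; refuse otherwise."""
        now = self.clock()
        # New clients start with a full bucket.
        tokens, last = self.buckets.get(client_id, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_sec)
        if tokens >= 1:
            self.buckets[client_id] = (tokens - 1, now)
            return True
        self.buckets[client_id] = (tokens, now)
        return False


if __name__ == "__main__":
    fake_time = [0.0]
    limiter = TokenBucketLimiter(capacity=3, refill_per_sec=1.0,
                                 clock=lambda: fake_time[0])
    # A burst of five requests at t=0: the first three pass, the rest are refused.
    print([limiter.allow("api-key-1") for _ in range(5)])
    fake_time[0] = 2.0  # two seconds later, two tokens have refilled
    print(limiter.allow("api-key-1"))
```

Which identifier the bucket is keyed on is a design choice: an API key or account ID is often harder for a bot to rotate than an IP address, which matters because advanced bots constantly change. A per-client limit on its own also does little against traffic spread thinly across many clients.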

The most common AI-assisted bots include ByteSpider Bot (responsible for 54% of all AI-enabled attacks), AppleBot (26%), Claude Bot (13%), and ChatGPT User Bot (6%). ByteSpider Bot's success comes from its widespread confusion with the legitimate ByteSpider web crawler operated by ByteDance (TikTok's parent company), which scrapes internet content to train ByteDance’s large language models.

The morality, if not the legality, of this practice is questionable under both GDPR and the EU AI Act, but ByteDance is not the only firm scraping content to train LLMs. Although companies can easily block web crawlers, defenders tend not to do so for fear of blocking genuinely good bots that may be beneficial. “Cybercriminals,” notes the report, “frequently disguise their malicious bots as web crawlers to evade detection and bypass security measures that whitelist known web crawlers.”
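One common countermeasure to this disguise tactic, offered here as an illustration rather than as Imperva's method, is to stop trusting the user-agent string and verify a claimed crawler with a forward-confirmed reverse DNS lookup: resolve the client IP to a hostname, check that the hostname belongs to a domain the crawler's operator publishes, then resolve that hostname forward and confirm it maps back to the same IP. Google, for example, documents this procedure for verifying Googlebot. The Python sketch below is hypothetical throughout: the function name and the `crawler.example.com` suffix are placeholders, real suffix lists must come from each operator's own documentation, and production code would cache results because DNS lookups are slow.

```python
import ipaddress
import socket
from typing import Callable, Iterable, List, Tuple


def _forward_ips(hostname: str) -> List[str]:
    """Resolve a hostname to all of its IPv4/IPv6 addresses via getaddrinfo."""
    # Each result is (family, type, proto, canonname, sockaddr); the address
    # is the first element of sockaddr for both IPv4 and IPv6.
    return [info[4][0] for info in socket.getaddrinfo(hostname, None)]


def is_verified_crawler(
    ip: str,
    allowed_suffixes: Iterable[str],
    reverse_lookup: Callable[[str], Tuple[str, List[str], List[str]]] = socket.gethostbyaddr,
    forward_lookup: Callable[[str], List[str]] = _forward_ips,
) -> bool:
    """Hypothetical forward-confirmed reverse DNS check for a claimed crawler.

    Returns True only if the IP's PTR hostname sits under one of
    `allowed_suffixes` AND that hostname resolves back to the same IP.
    """
    try:
        target = ipaddress.ip_address(ip)
    except ValueError:
        return False  # malformed client address

    # Step 1: reverse lookup (PTR record) of the client IP.
    try:
        hostname, _aliases, _addrs = reverse_lookup(ip)
    except OSError:  # socket.herror / socket.gaierror are OSError subclasses
        return False
    hostname = hostname.rstrip(".").lower()

    # Step 2: the hostname must be the operator's domain or a subdomain of it.
    suffixes = [s.rstrip(".").lower() for s in allowed_suffixes]
    if not any(hostname == s or hostname.endswith("." + s) for s in suffixes):
        return False

    # Step 3: forward-confirm. Whoever controls the IP block controls its PTR
    # record, so an attacker can make it claim any name; only the forward
    # lookup, answered by the operator's DNS, ties the name back to this IP.
    try:
        forward = forward_lookup(hostname)
    except OSError:
        return False
    for addr in forward:
        try:
            if ipaddress.ip_address(addr) == target:
                return True
        except ValueError:
            continue  # skip anything that is not a plain IP literal
    return False
```

In practice such a check would run only for requests whose user agent claims to be a known crawler, with failures blocked or challenged rather than allowlisted.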

Underlying everything is the growing use of AI, which is changing the ROI for attackers. AI empowers the ‘zero-knowledge threat actor’, boosts code generation, and scales the attacks. It enhances the polymorphic evasion techniques built into the bots and helps refine attackers’ tactics. “Bot operators now have AI to analyze where attacks succeed and where they fail,” continued Chang. “And they can use AI to adjust and refine the evasion techniques they build into their advanced bots.”

The influence of AI on the bot ecosystem will increase: we will see more attackers and more advanced attacks. To give an idea of the current scale, Imperva blocked around 13 trillion bot requests in 2024 and detected around 2 million AI-enabled attacks per day. “They range from simple to very sophisticated,” said Chang. “But we expect things to change. The bots will evolve, and it will be even harder to detect the coming and increased use of advanced AI-assisted malicious bots.”
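For a sense of what those totals mean day to day, the Python arithmetic below spreads them evenly over 2024 (a leap year, so 366 days). It assumes the 2 million per-day figure also applies to 2024. The article does not define an "attack", and one attack may involve many requests, so the two figures should not be compared directly.

```python
# Figures quoted by Chang; the per-day attack figure is assumed to cover 2024.
BLOCKED_REQUESTS_2024 = 13e12   # "around 13 trillion" blocked bot requests
AI_ATTACKS_PER_DAY = 2_000_000  # "around 2 million" AI-enabled attacks per day
DAYS_IN_2024 = 366              # 2024 was a leap year
SECONDS_PER_DAY = 86_400

# Simple averages: real traffic is bursty, so peaks are far higher.
requests_per_day = BLOCKED_REQUESTS_2024 / DAYS_IN_2024
requests_per_second = requests_per_day / SECONDS_PER_DAY
ai_attacks_per_year = AI_ATTACKS_PER_DAY * DAYS_IN_2024

print(f"blocked requests per day:    ~{requests_per_day / 1e9:.1f} billion")
print(f"blocked requests per second: ~{requests_per_second:,.0f}")
print(f"AI-enabled attacks per year: ~{ai_attacks_per_year / 1e6:.0f} million")
```

On those assumptions, Imperva blocked about 35.5 billion bot requests a day, roughly 411,000 a second on average, and saw on the order of 732 million AI-enabled attacks over the year.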
